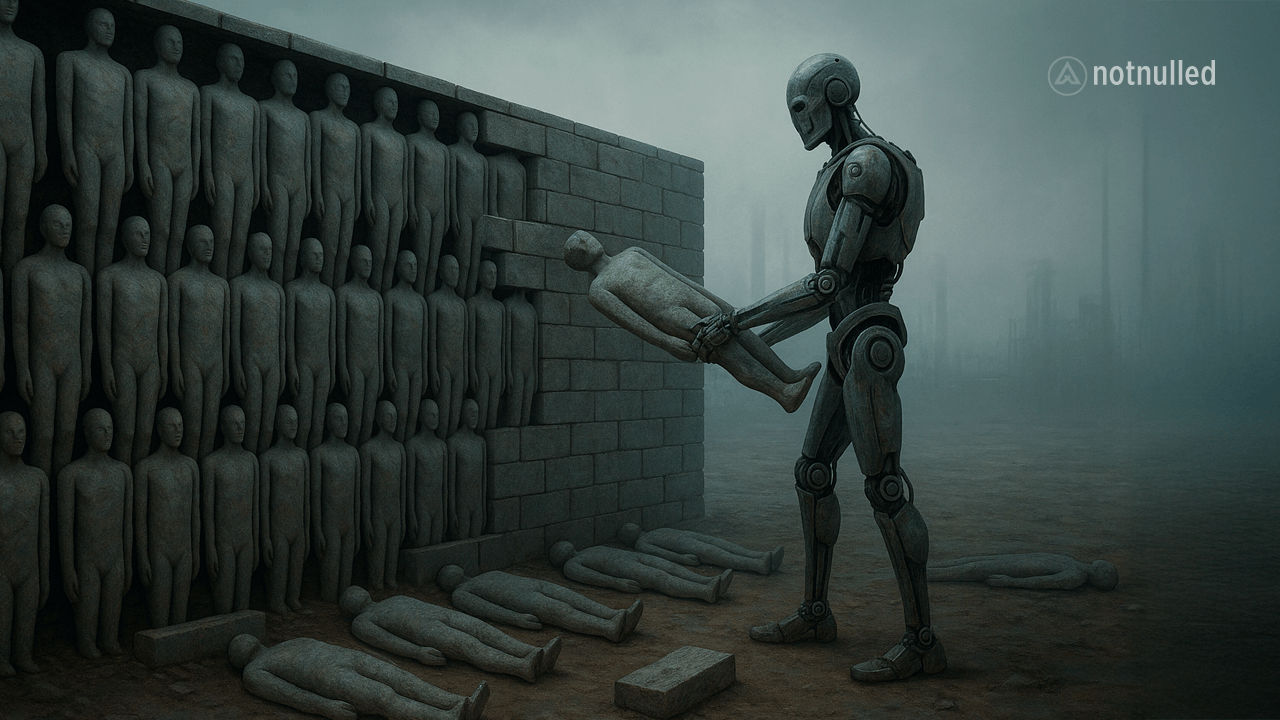

What we consume shapes us. This isn’t a new idea—ancient Greeks knew it, the founders of the world’s great religions knew it, and so did the early pedagogues. We are what we see, what we hear, what we repeat. The difference today—and the urgency—is that we are no longer the ones choosing what we see. A complex, invisible, automated system does it for us. We call it the algorithm, but we could also call it: teacher, editor, programmer of our perception.

We live under a regime of constant exposure. We spend hours staring at screens that speak to us, showing us a filtered world, prepackaged into a personalized feed we don’t always consciously choose. We open YouTube, and the videos choose us. We scroll Instagram, and the algorithm has already decided which bodies are beautiful, which lifestyles are desirable, and which emotions are worth displaying. On TikTok, time dissolves into an infinite choreography where what we watch, believe, and feel begins to blur. When was the last time you searched for something before it was suggested to you?

The idea that an algorithm is educating us might sound exaggerated, but consider this: if we learn through repetition, emotional impact, and daily exposure, then the feed is a kind of school. Not a school with blackboards and desks, but with endless scrolls, quick stimuli, and dopamine on demand. Who designed that classroom? With what values? With what goals?

Algorithms are not neutral. They are programmed—often not by one person, but by teams, companies, commercial interests, and cultural biases. Their primary goal is to keep you watching. To make you stay. To maximize your consumption. Their logic isn’t to expand your world, but to keep you comfortable, familiar, predictable. That’s why if you’re into astrology, you’ll get more astrologers, but rarely philosophers who question it. If you start watching content on productivity, you’ll be served up ten gurus preaching 5 a.m. routines, but few voices advocating for the right to rest.

This invisible filter creates bubbles, reinforces beliefs, and makes us feel like the world thinks just like we do. But the real danger isn’t only polarization or ideological bias—it’s the erosion of our discernment: the slow fading of our ability to distinguish between the real and the curated, between what we desire and what we were nudged toward, between what we learned and what we were fed. A kind of digital illiteracy where we believe we’re choosing, but we’re actually accepting what was preselected.
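The narrowing described above can be made concrete with a toy simulation. This is a minimal sketch, not a description of how any real platform ranks content. The topic names, the interest probabilities, and the rule that a topic's share of the feed equals its share of past clicks are all illustrative assumptions.

```python
# Toy model: every name and number here is an illustrative assumption.
TOPICS = ["astrology", "philosophy", "productivity", "rest"]

# Hypothetical chance that the viewer engages with an item of each topic.
# The viewer leans only slightly toward astrology.
INTEREST = {"astrology": 0.6, "philosophy": 0.5, "productivity": 0.5, "rest": 0.5}


def feed_shares(clicks):
    """Engagement-only ranking: a topic's feed share equals its share of past clicks."""
    total = sum(clicks.values())
    return {topic: clicks[topic] / total for topic in TOPICS}


def simulate(rounds, feed_size=10):
    """Return the feed composition at the start and after each round."""
    clicks = {topic: 1.0 for topic in TOPICS}  # no preference recorded yet
    history = [feed_shares(clicks)]
    for _ in range(rounds):
        shares = history[-1]
        for topic in TOPICS:
            shown = shares[topic] * feed_size         # expected items shown
            clicks[topic] += shown * INTEREST[topic]  # expected clicks earned
        history.append(feed_shares(clicks))
    return history


history = simulate(rounds=200)
for step in (0, 10, 50, 200):
    print(step, {t: round(s, 2) for t, s in history[step].items()})
```

In this toy model, a slight initial lean toward one topic is enough for that topic's share of the feed to grow every round while the others shrink, even though nobody ever asked for less philosophy or less rest.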

A 2023 report from Reviews.org found that the average American checks their phone over 140 times a day. This isn’t just about habits; it’s about mental architecture. Every check is a new dose of filtered reality. Every like, every video, every suggestion reinforces an invisible script about who you should be, think, buy, or desire.

What can we do? First, we must make the invisible visible. Understand that what we see is not random. Cultivate digital critical thinking: learn how platforms work, question why you’re seeing what you see. Diversify your sources. Step outside the feed. Return to books, long conversations, and silence.

Second, we must demand transparency. These platforms manage information that shapes behavior at a collective level, and yet their algorithms remain black boxes. We need public policies that regulate their impact, limit emotional manipulation, and protect the most vulnerable: children, teens, and people in crisis.

Third, we must reclaim the commons. Not everything should be personalized. Democracy, knowledge, and culture need shared spaces. We can’t build a future if each of us lives inside a channel curated by a machine whose only goal is to keep us scrolling.

The algorithm already teaches us. The question isn’t whether it does, but how, with what intention, and in whose hands. If we want technology to assist us without domesticating us, we must reclaim responsibility for what we watch. Because what we watch shapes us. And what we allow a machine to show us shapes us too.